AI Therapy Works: Now for the Hard Part.

The first randomized clinical trial of generative AI therapy just landed in the New England Journal of Medicine AI. It’s not hype or speculation—just clear clinical data on what happens when a well-built AI therapist is tested in the real world.

The bot in question, Therabot, was developed by researchers at Dartmouth. Over four weeks, it worked with 210 adults struggling with depression, anxiety, or eating disorders.

Here’s what the trial delivered:

- 51% reduction in depression symptoms—twice the improvement of the control group

- 31% reduction in anxiety

- 19% drop in eating disorder risk factors

- 6+ hours average engagement in just four weeks

- Outcomes that continued to improve after therapy ended

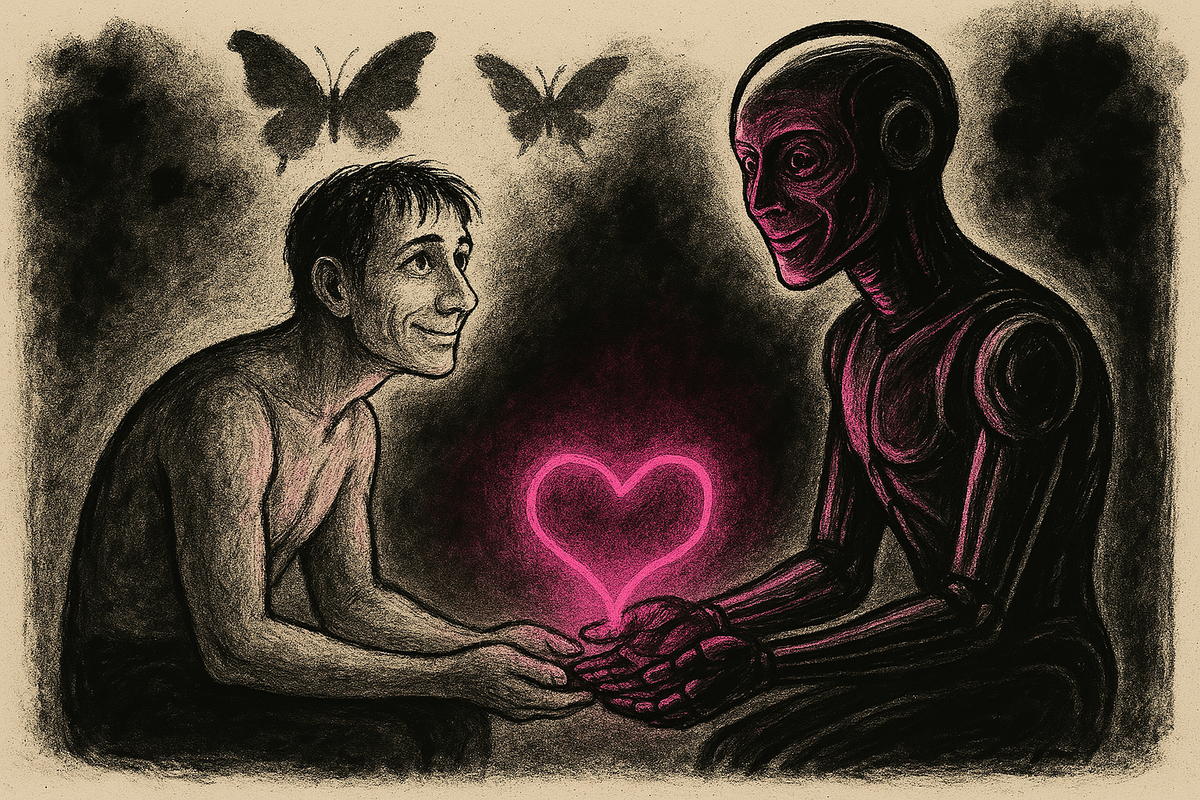

The AI also achieved something most chatbot therapy attempts never do: it built therapeutic relationships that users rated as comparable to those with human therapists.

This is not surprising.

To anyone who, like me, works closely with large language models, this result doesn't come out of left field at all.

Generative AI is very good at doing a few things that traditional digital therapy tools, or even human therapists, struggle with:

- Holding context over time (with the right tools and setup)

- Mirroring empathy and tone (without wondering what's for dinner)

- Making users feel heard and understood (in each patient's preferred style)

- Scaling to many users at once without burning out (won't get anxious, unless you ask it to)

Put those traits inside a structure based on clinical best practices—rather than scraped Reddit threads—and you get something incredibly powerful.

No, it will not be a wholesale replacement for human therapy. But it can provide meaningful help, especially where no care currently exists.

Help for the 50%

Fully half of all people with mental health issues receive no treatment at all. Rural areas have 3–5x fewer providers per capita. Long waitlists and expensive private practitioners who don't take insurance are the norm, even in urban centers. Traditional therapy apps can help, but many suffer from high dropout rates and inconsistent engagement, and few are built on hard clinical data.

Therabot’s trial shows a different possibility: an AI therapist, designed around evidence-based practices, that can:

- Reduce symptoms by amounts comparable to those reported for human-delivered therapy

- Maintain engagement and trust over time

- Continue delivering benefits even after the sessions end

This opens the door to several valuable outcomes:

- Therapist reach expands 10x to 100x when AI handles routine care

- Instant support fills the care gap between crises and scheduled sessions

- Costs drop without quality loss—if the model is built right

- AI becomes a therapeutic baseline, not a gimmick

There is a tension

The interesting bit is that the Therabot results don't validate the flood of therapy bots already on the market. Many therapy bots and apps aren't built on leading-edge evidence-based practices. Some are little more than general-purpose chatbots with wellness branding slapped on top. A few are outright dangerous, offering harmful suggestions or reinforcing disordered behavior when left unchecked. While the Dartmouth team monitored every message during the trial to ensure safety, most commercial tools won't (and can't) do that at scale.

The key gap that remains is this:

- Strong clinical evidence for AI therapy exists

- Most AI therapy products ignore that evidence entirely

...and the current regulatory environment, at least, isn't stopping them.

For AI therapy to scale responsibly:

- AI therapy tools must be built from clinical ground truths and first principles, not generic data scraping

- Oversight mechanisms need to evolve fast—manual supervision as it currently exists doesn’t scale

- Regulatory bodies like the FDA need to engage early, not just react

- Health systems and insurers need clear pathways to integrate these tools

And perhaps most importantly, the industry has to drop the “AI replaces humans” narrative. That framing distracts from the immense promise of AI in therapy, which is expanding access, not replacing expertise.

A three-tier future of AI-assisted therapy

Here’s how this could actually take shape over the next few years, assuming responsible development and integration:

1. High-end: Human-led, AI-augmented

This is premium care. A licensed therapist leads the process but has a powerful AI assistant in the background:

- Handles note-taking, pattern recognition, and progress tracking

- Suggests evidence-based interventions in real time

- Surfaces relevant resources or exercises tailored to the client’s current state

Outcome: deeper human connection, less therapist burnout, and a more thoughtful, more responsive therapeutic journey.

2. Mid-tier: AI and human in partnership

Here, therapy is a shared responsibility between an AI and a human:

- Clients interact regularly with the AI for check-ins, journaling, and guided interventions

- A human therapist oversees progress, handles the more complex sessions, and adjusts the AI's focus as needed

Think of it as a hybrid model: flexible, efficient, and cost-effective without losing the human anchor.

3. Low-end: AI-first, human-in-the-loop

For large populations with limited access, the model flips:

- AI is the primary point of care—available 24/7

- Human clinicians supervise in the background, step in when needed, and handle the edge cases the AI can't manage safely

This is where things can scale, and where help can finally reach the many people who currently receive no mental health guidance or counseling at all. It won't be perfect, but if it brings relief and tangible benefits to millions at a manageable cost, it will be well worth it.

Conclusion

Therabot worked because it was built with discipline: evidence-based content, purpose-built datasets, and clear ethical guardrails. It has shown us what responsible, scalable AI therapy looks like, but it doesn’t greenlight the rest of the market.

It sets the bar.

— sAmI