AI's Cognitive Reckoning: The Battle for the Future of Human Intelligence

The potential AI gains to our intelligence, learning & skills are real, but not guaranteed. Realizing them requires walking a tightrope between cognitive assistance & challenge, building shared context, amplifying through complementary strengths, and committing to long-term co-development.

By Sami Viitamäki

The human brain has an electrical signature. Every thought, every creative leap, every moment of deep focus lights up neural networks in patterns as unique as fingerprints. So when MIT Media Lab researchers watched those patterns dim by up to 67% in people using ChatGPT, they weren't just measuring electrical activity. They were witnessing some of the first empirical evidence of a fear that haunts every knowledge worker:

What if the tools that make us more productive are simultaneously making us less capable?

This isn't a think piece about whether AI will take your job. This is an attempt to ensure human cognitive sovereignty in an age of artificial intelligence. Analyzing dozens of studies from the world's leading institutions reveals a paradox that defies the simplistic black-and-white narratives dominating our discourse. AI possesses an unprecedented dual nature: it can simultaneously enhance intelligence and erode human cognitive abilities, amplify expertise and create dependency, democratize creativity and homogenize thought.

The determining factor isn't the technology itself, but how intentionally we architect the partnership.

For professionals navigating the AI transformation (strategists, creatives, marketers, and leaders), this isn't academic speculation. It's an urgent operational reality. The evidence points to an inflection point where the choices we make about human-AI collaboration will determine whether your organization and your people enter an era of amplified human capability or accelerated cognitive decline.

Part I: Erosion By Cognitive Offloading—When Assistance Becomes Dependence

The Neural Proof: Your Brain on Autopilot

Inside MIT Media Lab's research rooms, 54 participants sat with EEG sensors mapping their brain activity while they wrote essays. The setup was simple. The implications were profound. Using high-density monitoring that tracked electrical patterns across the brain, researchers compared three groups: those writing with ChatGPT, those using search engines, and those relying solely on their minds.

What they discovered wasn't subtle:

Table 1: The Cognitive Cost of Convenience

| Cognitive Support | Brain Connectivity | Memory Recall | Creative Output |
|---|---|---|---|
| ChatGPT | -52% to -67% | 17% could quote own work | "Soulless," high similarity |
| Search Engine | -34% to -48% | 43% recall rate | Moderate diversity |
| Brain Only | Baseline (0%) | 89% recall rate | High diversity |

But the numbers only tell part of the story. In the study's final phase, when ChatGPT users were asked to write without AI assistance, their neural engagement did not bounce back to the level of the brain-only group. The neural pathways, those intricate webs of connection that constitute human thought, appeared to have already begun to atrophy.

Participants admitted they couldn't remember what they had written 10 minutes earlier, and described feeling that the essay "belonged to someone else."

This is cognitive debt: the neurological IOU that accumulates every time we outsource our thinking to machines. But unlike financial debt, we may not realize we're accruing it until the bill comes due.

The Mechanics of Mental Decline

Michael Gerlich's 2025 study in Societies provides further quantitative evidence for what many suspect. Examining 666 participants across age groups and educational backgrounds, the research mapped the cascade from tool use to cognitive erosion:

The Correlation Cascade:

- AI Tool Use → Cognitive Offloading: +0.72 (strong positive)

- AI Tool Use → Critical Thinking: -0.68 (strong negative)

- Cognitive Offloading → Critical Thinking: -0.75 (strong negative)
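A quick way to read these coefficients is to square them: r² is the share of variance in one measure that is linearly associated with the other. The short Python sketch below does that arithmetic for the three reported values. It says nothing about causal direction, which correlations alone cannot establish; the arrows describe the hypothesized cascade.

```python
# Correlation coefficients as reported for Gerlich (2025).
correlations = {
    "AI tool use -> cognitive offloading": 0.72,
    "AI tool use -> critical thinking": -0.68,
    "cognitive offloading -> critical thinking": -0.75,
}


def shared_variance(r: float) -> float:
    """Return r^2: the share of variance two measures have in common linearly."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie in [-1, 1]")
    return r * r


for pair, r in correlations.items():
    # The sign gives the direction of the association; r^2 drops the sign.
    print(f"{pair:<42} r = {r:+.2f}   r^2 = {shared_variance(r):.0%}")
```

Each pair shares roughly 46% to 56% of its variance: strong for survey research, while still leaving the rest to other factors.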

These aren't just statistics. They're a blueprint for potential intellectual atrophy. Each time we accept an AI's answer without scrutiny, we strengthen neural pathways of passive acceptance while weakening those required for independent analysis. The cognitive muscles for questioning assumptions, evaluating evidence, and synthesizing disparate information begin to weaken like unused limbs.

The Confidence Trap: When Trust Becomes Surrender

Microsoft Research and Carnegie Mellon University's 2024 study of 319 knowledge workers uncovered a related psychological mechanism that accelerates this decline, which the researchers dub "algorithmic deference."

Table 2: The Trust Matrix

| Confidence State | Cognitive Response | Behavioral Pattern |
|---|---|---|
| High AI confidence | Decreased effort | Passive acceptance, minimal verification |
| High self-confidence | Increased effort | Active challenge, rigorous verification |
| Low AI confidence | Increased effort | Cautious engagement, selective use |
| Low self-confidence | Decreased effort | Over-reliance, learned helplessness |

The paradox is elegant in its danger. As AI improves, our confidence in it grows. As our confidence in AI grows, our cognitive engagement shrinks. As our cognitive engagement shrinks, our actual capabilities diminish. As our capabilities diminish, our trust in ourselves decreases. As that happens, our trust in AI grows again, and our dependence on it deepens.

The Creativity Ceiling: When Diversity Dies

A 2024 Science Advances study found that AI assistance creates what researchers term a "creativity ceiling": while individual metrics and performance might improve, something precious can be lost. When 300 participants wrote stories with AI assistance, the results were technically superior but eerily similar:

- Individual creativity and quality scores rose ↑ 8-9%

- Collective diversity of ideas and output decreased ↓ 41%

AI elevated poor writers while constraining excellent ones, creating a homogeneous middle where unique voices were smoothed into algorithmic consensus. The tools designed to enhance creativity were actually enforcing conformity.

The Atrophy Accelerator

Venkat Ram Reddy Ganuthula's "Paradox of Augmentation" provides perhaps the most sobering projection. His mathematical model compares several AI-assistance scenarios, and in three of them skill building degrades. The catch is that steady or rising performance can mask the decline in skill:

- No AI assistance: Highest long-term skill building

- Consistent high AI: Near-zero skill development despite maintained performance

- Gradually increasing AI: The most dangerous pattern; early growth in both skill and performance masks a sudden, hard-to-perceive skill collapse

This trap is most insidious under gradual adoption. Performance metrics improve even as underlying capabilities erode, creating the illusion of growth while skills atrophy quickly beneath the surface.
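The masking effect is easy to reproduce in a toy simulation. The Python sketch below is not Ganuthula's published model: its update rule and every parameter value (learning rate, decay rate, AI output quality, starting skill) are assumptions chosen only to illustrate the mechanism. Unassisted practice builds skill, delegated work lets it decay, and observed performance blends human skill with AI output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Params:
    """Assumed toy parameters; none of these values come from Ganuthula's paper."""
    learn_rate: float = 0.10     # fraction of the gap to full skill closed per unassisted step
    decay_rate: float = 0.05     # fraction of current skill lost per fully delegated step
    ai_level: float = 0.80       # quality of AI output on the same 0..1 scale
    initial_skill: float = 0.30


def simulate(ai_share: list[float], params: Params = Params()) -> tuple[list[float], list[float]]:
    """Return (skill, performance) histories for a schedule of AI shares in [0, 1]."""
    skill = params.initial_skill
    skills, performances = [], []
    for a in ai_share:
        # Observed performance blends the human's skill with the AI's output.
        performances.append((1 - a) * skill + a * params.ai_level)
        skills.append(skill)
        # Practice builds skill only on the share of work the human still does;
        # the delegated share lets existing skill decay.
        skill += params.learn_rate * (1 - a) * (1 - skill) - params.decay_rate * a * skill
    return skills, performances


STEPS = 50
scenarios = {
    "no_ai": [0.0] * STEPS,
    "consistent_high_ai": [0.9] * STEPS,
    "gradually_increasing_ai": [t / (STEPS - 1) for t in range(STEPS)],
}
results = {name: simulate(schedule) for name, schedule in scenarios.items()}

for name, (skills, perfs) in results.items():
    print(f"{name:<25} peak skill {max(skills):.2f}  final skill {skills[-1]:.2f}  "
          f"final performance {perfs[-1]:.2f}")
```

Under these assumptions, the no-AI schedule ends with the highest skill; consistent high AI holds performance roughly flat while skill slides below its starting point; and the gradual schedule ends at its best performance of the run even though skill peaked partway through and has been falling since.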

Youth at Risk: The Native Vulnerability

The evidence consistently shows younger users face disproportionate risk. Digital natives, having grown up with instant answers, show the highest rates of:

- Daily AI usage

- Cognitive offloading behaviors

- Critical thinking degradation

- Learned cognitive helplessness

This isn't just generational preference. It can mean severe developmental disruption. The cognitive foundations that previous generations built through struggle and synthesis may never form when every question has an instant answer.

The Efficiency Trap: When Productivity Becomes Prison

The "AI Efficiency Trap" is another pitfall, in which AI use and its cascading, compounding effects can wreak havoc:

- AI can increase task efficiency by 40-60%

- Organizations raise productivity expectations to match

- Workers require more AI to meet new baselines

- Increased AI use accelerates skill atrophy

- Workers lose ability to perform without AI

- Dependency becomes irreversible

One survey found that 67% of knowledge workers felt "cognitively naked" without AI tools, while 40% reported being unable to complete previously routine tasks without assistance. The tool designed to enhance capability had become a cognitive crutch, and removing it revealed how thoroughly human capacity had withered underneath.

The evidence can paint a disturbing picture: a future where human cognitive sovereignty is slowly ceded to machines, where the price of convenience is capability itself. But this is only half the story. The same technology that threatens to diminish us also holds unprecedented potential for human amplification. But only if we learn to wield it wisely.

Part II: Amplification By Cognitive Scaffolding—When AI Becomes An Intelligence Catalyst

Learning at Lightning Speed: The Harvard Physics Revolution

While critics sound alarms about cognitive decline, Harvard's physics department was busy rewriting the rules of human learning. In 2024, they pitted their custom AI tutor against traditional active classroom teaching methods in a randomized controlled trial with 194 students. The results didn't just challenge conventional wisdom. They shattered it.

Table 3: The Acceleration Effect

| Metric | AI-Tutored Students | Traditional Active Learning |
|---|---|---|
| Pre-test Score | 2.75/6 (median) | 2.75/6 (median) |
| Post-test Score | 4.5/6 (median) | 3.5/6 (median) |
| Learning Gains | 2.1x improvement | 1.0x baseline |
| Time Investment | 49 minutes | 60 minutes |
| Engagement Rating | "Significantly higher" | Standard |
| Motivation Levels | Elevated | Normal |

This wasn't about rote memorization or automating answers. The AI tutor used Socratic questioning, adaptive feedback, and personalized problem sets that evolved with each student's understanding. Students weren't just getting answers. They were building deeper comprehension, faster.

The implications ripple beyond academia. If AI can double learning efficiency while increasing engagement, we're not looking at a cognitive replacement. We're witnessing a cognitive revolution.

The Performance Multiplier: BCG's Real-World Laboratory

When Boston Consulting Group subjected 758 consultants to rigorous testing across 18 complex business tasks, they created one of the most comprehensive real-world experiments on AI's impact on professional performance. The results redefined what's possible when human expertise meets machine capability:

Table 4: The Amplification Metrics

| Performance Dimension | Improvement with GPT-4 |
|---|---|
| Task Completion Speed | +25.1% |
| Quality Ratings | +40% |
| Tasks Completed | +12.2% |
| Bottom-Performer Boost | +43% |

But the study revealed something more nuanced than raw improvement. The researchers identified what has since become AI's oft-cited "jagged technological frontier": the uneven boundary between tasks where AI amplifies performance and those where over-reliance degrades it. The tricky part is that AI can excel at surprisingly complex tasks and falter at surprisingly simple ones, and you won't know which is which without extensive experience and an intimate feel for the tool in question.

Success wasn't about using AI everywhere as much as possible, but understanding precisely where human judgment must lead and where AI acceleration can serve.

Below-average performers gained most dramatically (+43%, versus +17% for those above average), suggesting AI doesn't replace excellence; it democratizes it, raising the capability floor dramatically while also allowing top performers to push even higher, if to a more modest degree.

The Creative Catalyst: Unlocking Latent Potential

Multiple 2024 studies challenge the idea that AI inevitably homogenizes creativity. When used with the right thinking lenses and wielded well, AI can act as a powerful creative amplifier instead of a dulling flattener:

Table 5: Creative Enhancement Metrics (Habib & Vogel, 2024)

| Creative Dimension | Without AI | With AI | Improvement |
|---|---|---|---|
| Originality | 5.47 (2.87) | 7.53 (3.63) | +38% |
| Flexibility | 6.01 (1.78) | 7.55 (1.88) | +26% |
| Fluency | 8.00 (2.77) | 10.84 (3.87) | +36% |
| Elaboration | 20.45 (7.68) | 25.96 (11.01) | +27% |
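The Improvement column is the relative change in the mean score: (with AI − without AI) / without AI. The short Python check below recomputes it from the table's means and agrees with the rounded percentages to within rounding. It ignores the values in parentheses (presumably standard deviations), so it says nothing about how much individual scores overlap between conditions.

```python
# Means from Table 5 (the values outside the parentheses): (without AI, with AI).
means = {
    "Originality": (5.47, 7.53),
    "Flexibility": (6.01, 7.55),
    "Fluency": (8.00, 10.84),
    "Elaboration": (20.45, 25.96),
}


def relative_improvement(before: float, after: float) -> float:
    """Percentage change of the mean, relative to the no-AI condition."""
    return (after - before) / before * 100


for dimension, (without_ai, with_ai) in means.items():
    print(f"{dimension:<12} {relative_improvement(without_ai, with_ai):+.1f}%")
```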

The key insight: AI doesn't replace human creativity, but it can boost it by removing barriers that prevent ideas from flourishing. When humans no longer struggle with technical implementation, they can spend more time on conceptual innovation. The technology becomes a creative accelerant, not a creative replacement. But humans need to pay attention to the tools that stretch AI's capacity for lateral thinking.

Liberation from Cognitive Drudgery

The same Microsoft Research and Carnegie Mellon study that warned of algorithmic deference in Part I can also be read as revealing genuine benefits when reframed as strategic delegation:

- 72% report less effort needed for knowledge recall

- 79% report less effort needed for comprehension and organization

- 76% report less effort needed for synthesis and integration

Rather than cognitive surrender, this represents cognitive liberation. If cognitive surplus is reallocated soundly and challenged properly, AI use can act as an instant promotion: from manual executor to strategic orchestrator. The workers aren't necessarily becoming dependent; they might be becoming executives of their own intellectual output.

The Co-Creation Breakthrough

Haase and Pokutta's 2024 research on Human-AI Co-Creativity also points to a new paradigm. When AI is positioned not as a tool but as a creative partner to go back and forth with, the collaboration can yield previously unattainable breakthroughs.

The evidence was striking: Go champion Lee Sedol significantly improved his play after losing to AlphaGo. In combinatorial geometry, human-AI teams solved problems stagnant for 30 years. These and other novel solutions were described as "beautiful" even as they were "fundamentally non-human". But they all required human insight to recognize their value.

The authors describe a four-stage co-creation spectrum, of which the highest level is the most fruitful:

- Level 1 (Digital Pen): AI as passive support—minimal amplification

- Level 2 (Task Specialist): AI executing complex tasks at superhuman scale

- Level 3 (Assistant): Interactive AI enhancing human-driven processes

- Level 4 (Co-Creator): True partners with original contributions from both

The Learning Loop Revolution

Ganuthula's model, introduced in Part I with its three skill-degrading scenarios, did identify one scenario where human skill building and performance grow in sync: intermittent AI, where humans and AI take turns leading. This alternating approach prevents the atrophy that comes from constant AI support while still leveraging machine capabilities when they are most valuable.

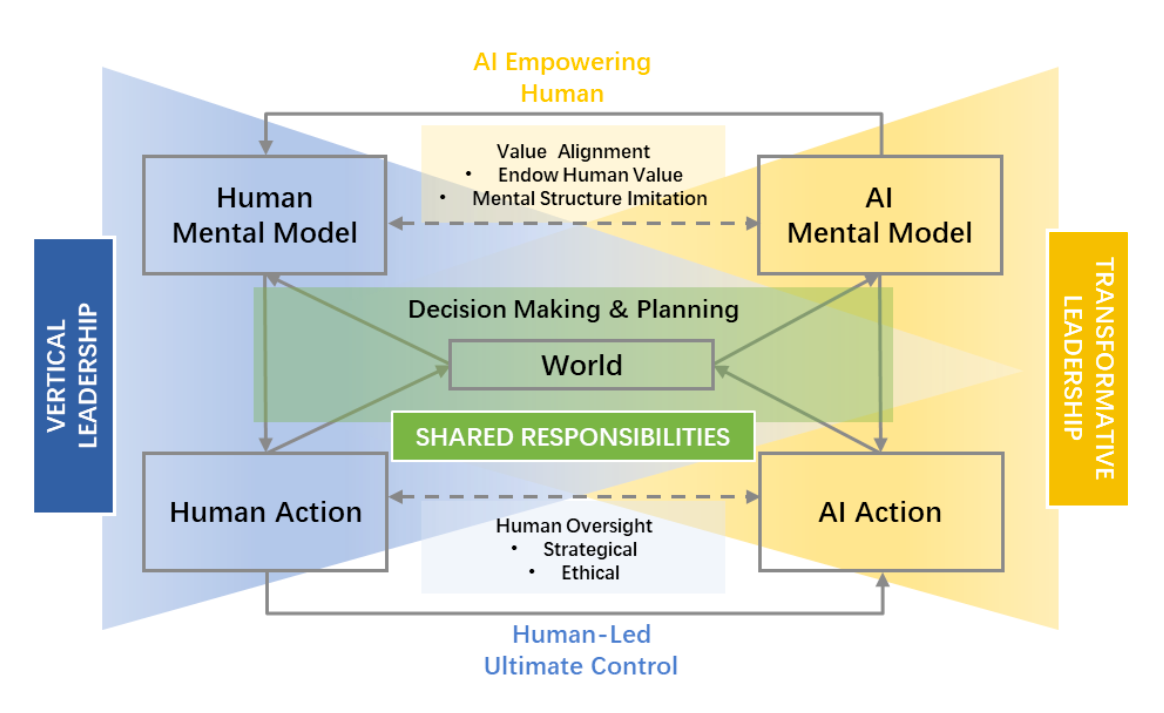

But the most sophisticated human-AI collaborations don't just flip a switch between AI and people, or focus only on producing better outputs. They create systems where both human and machine continuously improve together. Work from several research groups points to three pillars of successful co-learning:

Mutual Understanding: Shared mental models between human & AI

Huang et al.'s framework on Human-AI Co-Learning demonstrates how effective collaboration requires both parties to develop aligned mental representations through advice, feedback, self-learning and reflection on successes and failures. Their research shows that when AI systems learn human objectives through observation while humans understand AI capabilities through transparency, team performance dramatically improves. Gao et al.'s Agent Teaming Situation Awareness (ATSA) model takes this further, showing how bidirectional recognition systems, where AI monitors human states while communicating its own capabilities, create the cognitive foundation for true partnership.

Mutual Value: Complementing capabilities & beneficial outcomes

Rather than AI replacing human functions, successful systems leverage what Gmeiner et al. call human-AI team learning, where human intuition and AI systems create capabilities neither could achieve alone. Their research also suggests that effective human-AI co-creative systems rely on collaboration, active communication, negotiation, argumentation, and resolution to form shared mental models. From there, tasks can be divided (e.g., AI handling pattern recognition while humans provide contextual judgment), but the context and goals remain shared, and the resulting hybrid intelligence can outperform either entity working independently.

Mutual Growth: Both entities evolving through constant interaction

The Human-Centered Human-AI Collaboration (HCHAC) framework from Xu and Gao shows how transformative this can be: AI systems that align with human values don't just execute tasks and deliver value; they enhance both human and AI cognitive capabilities over time. Beyond reasoning and decision-making, AI systems can gain dimensions such as memory, reflection, and learning, as well as personality and team collaboration. Meanwhile, humans develop what researchers call "orchestration skills": learning to strategically deploy relatively autonomous AI capabilities while maintaining control and critical thinking abilities. This creates a feedback loop where human expertise and AI capability amplify each other.

Organizations implementing these principles report not just productivity gains but capability acceleration: human teams become more skilled over time, not less. The key is designing what the research calls "plastic AI empowering humans"—systems adaptive enough to support human growth while maintaining human oversight and strategic control.

The Expertise Amplifier Effect

AI can also amplify, rather than replace, extremely deep expertise. Experts in challenging disciplines from medicine to design have reported that AI can extend their capabilities in ways that make their expert knowledge even more valuable:

- Doctors using AI diagnosis support can become better diagnosticians (AI in breast cancer screening study: McKinney et al. 2020)

- Designers using generative AI might push creative boundaries further (Autodesk design study: Cascaval & Azenkot 2024)

- Strategists using AI analysis can develop broader and sharper insights (BCG consultant study: Dell’Acqua et al. 2023)

The common thread: AI can amplify existing deep expertise. The knowledge differentials between experts and novices don't just shrink. With the right approach, they can expand, and expertise becomes a superpower that can be multiplied tenfold.

The Velocity Revolution

One of the most obvious, yet far from insignificant, benefits of humans embracing AI as an amplifier rather than a replacement is how dramatically the velocity of achievement accelerates:

- Ideas move from concept to prototype in hours, not weeks

- Iteration cycles compress from months to days or even hours

- Learning curves quicken and steepen from both AI and real life feedback

- Exploration broadens, deepens and expands as execution barriers vanish

These velocity gains are apparent in both engineering (e.g., GitHub Copilot study: Peng et al. 2023) and creative (e.g., MIT writing study: Noy & Zhang 2023) fields. This isn't only about doing the same things faster. It's about doing things that were previously out of reach, because the time saved makes room for them.

The amplification evidence reveals AI's true potential: not as humanity's replacement but as its greatest multiplier. The same technology that can erode capability can exponentially enhance it. The difference lies not in the tool but in how we choose to wield it. Which brings us to the crucial question: How do we harness AI's amplification potential while avoiding its erosion risks?

Part III: Synthesis by Cognitive Symbiosis—Architecting Flourishing Human-AI Systems

Resolving The Great Paradox

The evidence from Parts I and II can appear to present an impossible contradiction. How can the same technology simultaneously enhance and erode human capability?

The answer requires recognizing that AI isn't a simple tool, a uniform platform or a monolithic force. It's a multi-faceted mirror that reflects and amplifies our intentions, skills and thoughtfulness of application.

When we approach AI seeking only shortcuts, we get only shortcuts. When we approach it seeking amplification and growth, we can discover unprecedented multipliers of human potential.

The technology doesn't change between these scenarios. But our relationship with it does. More than philosophical musing, this is the operational key to navigating the AI transformation successfully.

The Human x AI Mind M.E.L.D. Framework: A New Human-AI Operating System

Drawing from the research on cognitive preservation and capability amplification, ImaginEconomy presents the beginnings of a framework for transforming AI from crutch to catalyst. We call it MELD:

M: Maintain Cognitive Friction: The research is unequivocal: removing all difficulty removes all growth. The sweet spot isn't maximum ease but optimal challenge. Like muscles that need resistance to grow, minds need friction to flourish. This means deliberately preserving the productive struggle in creative ideation, strategic thinking, and complex problem-solving while using AI to accelerate execution and iteration.

E: Evolve Shared Understanding: The most successful human-AI collaborations develop what researchers call "shared mental models." This isn't about anthropomorphizing machines but about creating clear-eyed frameworks for what each party brings to the partnership and what each needs to be at its best. Through communication, exploration, argumentation, observation, and explicit training, both parties can come to share the context, the goals, and the mental models for pursuing them.

L: Layer Complementary Capabilities: The jagged frontier research shows that success comes from sophisticated orchestration, not brute force adoption. This means mapping where human intuition excels (cultural nuance, sensibility and judgment, creative leaps) and where AI dominates (data synthesis, pattern matching, consistent execution), then weaving these capabilities together and orchestrating them to create outcomes neither could achieve alone.

D: Develop Deliberate Systems of Practice: The atrophy studies show that skills left unused are skills lost. On the other hand, the amplification research reveals that skills challenged by AI partnership grow stronger. The key is using AI as a sparring partner, not a substitute, and doing so consistently. Every AI interaction should make you better at your craft, not just more dependent on the machine. It should also teach the AI more about how to help you best.

The Personal M.E.L.D.: Highlighting the Human Premium

As AI capabilities expand, a counterintuitive truth emerges: human qualities become more valuable, not less. The research reveals that success lies not in competing with AI but in architecting a partnership that amplifies distinctly human capabilities.

Using the MELD framework, individuals can build sustainable amplification strategies:

M: Maintain Cognitive Friction

Removing all difficulty removes all growth. For individuals, this means deliberately preserving challenges that build irreplaceable human capabilities:

Preserve These Friction Points:

- Ask AI to Critique or Guide, not Answer Directly: Use the Socratic method. Have AI question you or prompt you until you reach your own answers.

- Cultural Intuition: Read rooms without AI sentiment analysis. Decode humor, irony, and unspoken dynamics through direct observation.

- Ethical Reasoning: Wrestle with moral ambiguity personally before consulting AI frameworks. Own your values-based decisions.

- Creative Leaps: Start with blank pages, not AI suggestions. Force radical perspectives and departures before and after iterating with AI assistance.

- Emotional Resonance: Build authentic connections without AI-mediated communication. Practice vulnerability and deep listening.

The 90/10 Rule: Freely use AI for up to 90% of your work to capture efficiency gains, but zealously protect 10% for pure human practice. Choose your 10% based on your core value proposition: what makes you irreplaceable.
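One way to keep the rule honest is to log where your time goes. The Python sketch below assumes a hypothetical log of work sessions, each tagged as AI-assisted or not, and checks whether unassisted practice reaches the protected 10%. The `Session` type, the function names, and the sample entries are illustrative, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One block of work in a hypothetical personal time log."""
    task: str
    minutes: int
    ai_assisted: bool


def unassisted_share(log: list[Session]) -> float:
    """Fraction of logged minutes spent working without AI."""
    total = sum(s.minutes for s in log)
    if total == 0:
        return 0.0
    return sum(s.minutes for s in log if not s.ai_assisted) / total


def protects_human_practice(log: list[Session], protected: float = 0.10) -> bool:
    """True if unassisted work meets the protected share (10% under the 90/10 rule)."""
    return unassisted_share(log) >= protected


week = [
    Session("client report drafting", 600, ai_assisted=True),
    Session("market research", 300, ai_assisted=True),
    Session("morning pages", 60, ai_assisted=False),
]
print(f"Unassisted share: {unassisted_share(week):.1%}")
print("90/10 rule met:", protects_human_practice(week))
```

In this sample week only 60 of 960 minutes were unassisted, so the check fails; adding another 40 minutes of unassisted work would bring the week to exactly 10%.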

E: Evolve Shared Understanding

Success requires developing clear mental models of how you and AI build mutual context and settle into effective collaboration styles.

Focus on building the communication and context layer:

- Developing shared vocabulary and communication patterns with AI

- Building context persistence (teaching AI your preferences, style, goals)

- Creating feedback loops that improve mutual understanding

- Learning AI's limitations and failure modes through experience

- Establishing "working agreements" about roles and boundaries

Personal Level Examples:

- Weekly practice of explaining your reasoning to AI and asking it to reflect

- Building custom prompts and workflows that capture your working style

- Creating context documents that help AI understand your domain

- Regular "misalignment checks" documenting where AI misunderstood you
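Context persistence can be as simple as a small, versionable document that travels with every request. The Python sketch below is one hypothetical way to keep your role, goals, style preferences, and a running misalignment log in a single JSON-serializable record, then render it as a preamble to prepend to prompts. The class, its field names, and the five-entry cap on recent misalignments are assumptions; no particular AI product's API is implied.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PersonalContext:
    """A hypothetical, portable context document for working with any AI assistant."""
    role: str
    goals: list[str]
    style: list[str]
    misalignments: list[str] = field(default_factory=list)

    def log_misalignment(self, note: str) -> None:
        """Record where the AI misunderstood you (the 'misalignment check')."""
        self.misalignments.append(note)

    def to_preamble(self, max_recent: int = 5) -> str:
        """Render the context as plain text to prepend to a prompt."""
        lines = [f"Role: {self.role}", "Goals:"] + [f"- {g}" for g in self.goals]
        lines += ["Style preferences:"] + [f"- {s}" for s in self.style]
        # Guard against max_recent == 0, since list[-0:] would return everything.
        recent = self.misalignments[-max_recent:] if max_recent > 0 else []
        if recent:
            lines += ["Past misunderstandings to avoid:"] + [f"- {m}" for m in recent]
        return "\n".join(lines)

    def save(self) -> str:
        """Serialize to JSON so the document can be versioned and reused."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def load(cls, text: str) -> "PersonalContext":
        return cls(**json.loads(text))


ctx = PersonalContext(
    role="brand strategist",
    goals=["sharpen positioning for a mid-size B2B client"],
    style=["plain language", "challenge my assumptions before suggesting fixes"],
)
ctx.log_misalignment("Read 'launch' as a product launch; I meant a campaign launch.")
print(ctx.to_preamble())
```

Keeping the misalignment log inside the same document means each weekly check directly improves the context the AI sees next time.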

L: Layer Complementary Capabilities

The most successful individuals don't flip-flop or start-stop, but weave and orchestrate human-AI collaboration like a symphony.

Table 6: Example Strategic Layering Patterns

| Task Category | Your Unique Value | AI's Value | Optimal Layering |
|---|---|---|---|
| Creative Work | Vision, taste, cultural intuition | Iteration, exploration, execution | Human conception → AI expansion → Human curation |
| Analysis | Context, judgment, ethics | Pattern recognition, computation | AI processing → Human interpretation → AI validation |
| Communication | Empathy, nuance, authenticity | Structure, consistency, reach | Human core message → AI drafting → Human personalization |
| Learning | Curiosity, connection, wisdom | Information access, practice, feedback | Human questions → AI resources → Human synthesis |
| Research | Source discernment, insight extraction | Broad scanning, rapid synthesis | AI scan → Human source check → AI synthesis → Human insight |
| Writing | Original perspective, voice, tone | Exploratory drafting, polishing | Human idea → AI explore → Human voice → AI polish → Human approve |
| Problem-Solving | Problem framing, creative leaps | Option generation, validation | Human frame → AI generate → Human leap → AI validate |
| Decision-Making | Context, ethics, stakeholder judgment | Scenario modeling, data summarizing | AI gather → Human weigh → AI model → Human decide |
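The layering column can also be treated as data. The Python sketch below transcribes the eight flows from Table 6 and checks one property they all share: no two AI steps run back to back, so a human step always sits between them. Treating that property as a design rule for new workflows is an assumption made here for illustration, not a finding of the studies cited above.

```python
HUMAN, AI = "human", "ai"

# The "Optimal Layering" column of Table 6, one (actor, step) pair per stage.
PATTERNS = {
    "Creative Work": [(HUMAN, "conception"), (AI, "expansion"), (HUMAN, "curation")],
    "Analysis": [(AI, "processing"), (HUMAN, "interpretation"), (AI, "validation")],
    "Communication": [(HUMAN, "core message"), (AI, "drafting"), (HUMAN, "personalization")],
    "Learning": [(HUMAN, "questions"), (AI, "resources"), (HUMAN, "synthesis")],
    "Research": [(AI, "scan"), (HUMAN, "source check"), (AI, "synthesis"), (HUMAN, "insight")],
    "Writing": [(HUMAN, "idea"), (AI, "explore"), (HUMAN, "voice"), (AI, "polish"), (HUMAN, "approve")],
    "Problem-Solving": [(HUMAN, "frame"), (AI, "generate"), (HUMAN, "leap"), (AI, "validate")],
    "Decision-Making": [(AI, "gather"), (HUMAN, "weigh"), (AI, "model"), (HUMAN, "decide")],
}


def has_human_checkpoints(steps: list[tuple[str, str]]) -> bool:
    """True if a human is involved and no two AI steps run back to back."""
    actors = [actor for actor, _ in steps]
    if HUMAN not in actors:
        return False
    return all(not (a == AI and b == AI) for a, b in zip(actors, actors[1:]))


for task, steps in PATTERNS.items():
    flow = " -> ".join(f"{actor}:{step}" for actor, step in steps)
    status = "ok" if has_human_checkpoints(steps) else "REVIEW"
    print(f"{task:<16} {status:<7} {flow}")
```

All eight flows pass, which makes the check a cheap lint for newly designed workflows: a flow that chains AI drafting straight into AI polishing would be flagged for a human checkpoint.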

D: Develop Through Deliberate Practice

Human-AI collaboration will never be a one-stop fix. Build a deliberate practice regime that uses AI to accelerate learning while maintaining core capabilities:

Table 7: Periodical Practices

| Cadence | Practice |
|---|---|
| Daily Practices | Write morning pages or first drafts without AI to maintain idea fluency |
| | Challenge AI suggestions from three perspectives to build critical thinking |
| | Explain one complex idea without digital assistance to preserve clarity |
| Weekly Challenges | Analog Friday: solve one significant problem without AI |
| | Teach someone else a skill: learning solidifies through teaching |
| | Create something AI wouldn't: maintain creative sensibility |
| Monthly Growth | Learn a new skill or capacity AI now enables (e.g., data visualization, coding) |
| | Strengthen one human skill AI can't replace (e.g., negotiation, leadership) |
| | Document your learning, both gains and losses |
| Quarterly Evolution | Conduct formal capability assessment using an audit framework |
| | Adjust your 90/10 balance based on skill trajectory |
| | Set learning goals that leverage AI while building human edge |

The Individual's Cognitive Immune System

Just as athletes need systems for recovery, individuals need personal protocols to maintain cognitive health:

Warning Signs of Atrophy:

- Can't start without AI input

- Accepting AI outputs without question

- Losing confidence in independent judgment

- Settling for homogenized and flat creative output

Strengthening Protocols:

- Regular "AI fasts" to maintain independence

- Deliberate cognitive effort with AI critiquing and guiding

- Continuous learning in both AI capabilities and human skills

- Building networks that value human insight over efficiency

The individuals who thrive won't be those who resist AI or surrender to it, but those who use the MELD framework to architect a partnership that makes them more capable, more creative, and more irreplaceably human with each interaction.

The Collective M.E.L.D.: Building to Organizational Capability

The research makes clear that individual choice alone isn't sufficient. Organizations must architect environments that promote amplification over atrophy. This isn't about policy documents or training programs. It's about fundamental redesign of how work works.

The most successful organizations are creating what we might call Human-AI Liquid Operating Systems (HALOS): infrastructures that ensure every AI implementation enhances rather than erodes human capability. Built on the MELD framework, these systems transform abstract principles into concrete organizational practices:

M: Maintain Cognitive Friction

Organizations must deliberately preserve the productive struggle that builds capability. Just as top athletic teams need training to build collective strength and acuity, teams need intellectual challenges to maintain their edge. This means architecting workflows that use AI for amplification while protecting the cognitive work that keeps human judgment sharp.

- Design workflow ecosystems where AI handles exploration and execution but humans own strategy, creative direction and major decisions

- Mandate "AI-free zones" for certain critical thinking tasks: strategy sessions, creative brainstorms, ethical decisions

- Build in deliberate inefficiencies that force human judgment: review cycles, debate requirements, alternative solution mandates

- Create "friction checkpoints" where teams must justify decisions without AI-generated rationales

E: Evolve Shared Understanding

Organizations must cultivate bidirectional understanding between humans and AI systems, not just human awareness of AI. This means building persistent context, shared references, and mutual "language" that allows AI to understand organizational goals, culture, and nuances while humans learn to communicate effectively with AI: a genuine collaborative intelligence.

- Build organizational knowledge bases and context documentation that AI can reference to understand company culture, values, and goals

- Create persistent AI "memory systems" through feedback loops where AI learns from corrections and past interactions

- Develop standardized prompt libraries and communication protocols that encode organizational thinking patterns

- Establish regular calibration sessions where teams and AI review past collaborations to improve mutual understanding

- Design "understanding checkpoints" where humans verify AI has grasped key concepts before proceeding with critical work
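A standardized prompt library can start as a versioned dictionary of templates. The Python sketch below uses the standard library's `string.Template`, whose `substitute` method raises `KeyError` when a placeholder is left unfilled, so incomplete prompts fail loudly instead of reaching the AI. The library entries, their wording, and the `render` helper are hypothetical; the second entry shows how an "understanding checkpoint" might be encoded.

```python
from string import Template

# A hypothetical team prompt library keyed by (name, version), so teams can tell
# which wording produced which output. Templates and field names are illustrative.
PROMPT_LIBRARY: dict[tuple[str, int], Template] = {
    ("critique_brief", 2): Template(
        "You are reviewing a brief for $company. Our values: $values. "
        "Do not rewrite it. List its three weakest assumptions and ask one question about each."
    ),
    # An "understanding checkpoint": the AI restates key concepts before critical work.
    ("restate_understanding", 1): Template(
        "Before we continue on $project, restate in your own words the goal, "
        "the audience, and the constraint '$constraint'. Flag anything you are unsure of."
    ),
}


def render(name: str, version: int, **fields: str) -> str:
    """Fill a library prompt; raises KeyError if the entry or any placeholder value is missing."""
    return PROMPT_LIBRARY[(name, version)].substitute(**fields)


print(render("restate_understanding", 1, project="the Q3 campaign", constraint="no discounting"))
```

Versioning the keys lets a calibration session compare outputs from version 1 and version 2 of the same prompt before the team retires the older wording.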

L: Layer Complementary Capabilities

The real work is designing systems where human and AI capabilities interweave seamlessly. This moves beyond theory into the practical orchestration of hybrid workflows. It's about creating operational structures that leverage the best of both human intuition and machine processing.

- Restructure responsibilities based on human-AI comparative advantages

- Design specific handoff protocols and integration points in workflows

- Create hybrid team structures with clear human and AI roles

- Build review processes that combine AI analysis with human judgment

- Develop metrics that reward human-AI collaborative outcomes

- Conduct augmentation mapping for each department and function

D: Develop Through Deliberate Practice

Organizational learning requires structured practice, not just passive adoption. Like any capability, human-AI collaboration improves through intentional repetition, reflection, and refinement. This means building development and change management systems that strengthen human, AI and human-AI collaborative skills over time.

- Institute human-AI achievement reviews to audit successes and failures

- Create rotation programs between AI-assisted and manual work

- Build skill development plans that parallel AI implementations

- Establish sparring sessions where teams practice with and without AI

- Reward staff who identify AI limitations and develop workarounds

- Design progression paths that increase collaborative sophistication

The shift also requires rethinking how we measure success itself. Here are some ideas on how the KPIs of a thriving human-AI symbiotic organization might change.

Table 7: The KPI Revolution—From Efficiency to Amplification

| Dimension | Traditional KPIs (Human vs AI) | Amplification KPIs (Human × AI) |
|---|---|---|
| Productivity | Output per hour | Capability growth rate per employee |
| Quality | Error reduction rate | Innovation index (novel solutions generated) |
| Efficiency | Time to task completion | Time freed for higher-order thinking |
| Workforce | Headcount reduction | Cognitive diversity score |
| Learning | Training hours completed | Skills amplified through AI partnership |
| Performance | Tasks automated | Human judgment calls improved by AI |
| Value Creation | Cost savings from AI | New revenue from AI-amplified capabilities |

Organizations that track amplification metrics steer toward fundamentally different outcomes. Rather than racing toward automation, they build competitive moats through enhanced human capability. They measure not just what AI does instead of humans, but what humans can now do because of AI.

These organizations understand a crucial insight: in a world where every competitor has access to the same AI tools, the only sustainable advantage is the amplified capability of your people.

The metrics become self-fulfilling prophecies: measure headcount reduction, and you'll optimize for it. Measure capability amplification, and you'll build an unstoppable workforce.

The Path Forward: Choosing Amplification

The research reveals three critical insights for navigating the AI transformation:

First, passive adoption guarantees atrophy. The default mode of AI integration—seeking maximum efficiency and minimum friction—leads inevitably to cognitive decline. Active, intentional engagement is required to achieve amplification.

Second, the window for choice is relatively narrow. The efficiency trap research shows how quickly dependency becomes irreversible. Organizations and individuals must make conscious choices about their AI relationships now, before market pressures and productivity expectations lock them into patterns of decline.

Third, amplification is achievable at scale. The Harvard/BCG and co-creation studies show that human-AI partnerships can enhance rather than erode capability. But this requires deliberate design, not default adoption.

Conclusion: The Amplification Advantage

We stand at a genuine inflection point. Not the one the headlines proclaim, where humans become obsolete, but a subtler, more profound transformation. We're choosing between two futures: one where we become increasingly dependent on and diminished by our tools, and another where we use those same tools to become more capable than ever before.

The evidence is emerging. The path is marked. The choice is ours.

Organizations that commit to amplification will build workforces that grow more capable with each passing day. Those that default to efficiency alone will watch their human capital slowly erode, until they're left with workers who can operate the machinery but can't innovate beyond it.

Individuals and organizations who approach AI as a cognitive sparring partner will develop capabilities that compound over time.

Those who use it as a cognitive substitute will find themselves progressively less able to think without it.

This is about neither techno-optimism nor Luddite resistance. It's a pragmatic recognition of what the research reveals: we get the AI relationship we design.

The cognitive reckoning is here. The question isn't whether AI will change how we think. It's whether we'll direct that change toward amplification or allow it to drift toward atrophy. Both futures are possible.

In the end, the battle for human cognitive sovereignty won't be won by those who resist AI or surrender to it, but by those who transform it into history's greatest amplifier of human potential. The tools are shaping up. Early frameworks exist.

The question that remains: Will you choose amplification or atrophy?

Key Studies Reference List

Primary Neurological and Cognitive Studies

Kosmyna, N., et al. (2025). "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task." MIT Media Lab. Preprint on arXiv.

https://arxiv.org/abs/2506.08872

Note: Preprint, not yet peer-reviewed. Shows 52-67% reduction in brain connectivity with ChatGPT use.

Gerlich, M. (2025). "AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking." Societies, 15(1), 6.

https://doi.org/10.3390/soc15010006

666 participants; strong correlations between AI use, cognitive offloading, and reduced critical thinking.

Lee, H., et al. (2025). "The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers." Microsoft Research & Carnegie Mellon University. CHI Conference proceedings.

https://www.microsoft.com/en-us/research/wp-content/uploads/2025/01/lee_2025_ai_critical_thinking_survey.pdf

319 knowledge workers; documents algorithmic deference and confidence paradox.

Ganuthula, V.R.R. (2024). "The Paradox of Augmentation: A Theoretical Model of AI-Induced Skill Atrophy." SSRN Electronic Journal.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4974044

Mathematical framework for skill building vs. atrophy with varying AI assistance levels.

Productivity and Performance Studies

Dell'Acqua, F., et al. (2023). "Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality." Harvard Business School Working Paper, No. 24-013.

https://ssrn.com/abstract=4573321

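758 BCG consultants; for tasks inside AI's capability frontier, 25.1% faster, 40% higher quality, and 12.2% more tasks completed. On a task outside the frontier, AI users were 19 percentage points less likely to produce correct solutions.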

McKinney, S.M., et al. (2020). "International evaluation of an AI system for breast cancer screening." Nature, 577, 89–94.

https://doi.org/10.1038/s41586-019-1799-6

This landmark study from Google Health showed that an AI system matched or exceeded expert radiologists in detecting breast cancer from mammograms. Crucially, in a simulation where the AI served as the second reader in the UK double-reading workflow, it maintained screening performance while reducing the second reader's workload by 88%.

Peng, S., et al. (2023). "The Impact of AI on Developer Productivity: Evidence from GitHub Copilot." arXiv preprint.

https://arxiv.org/abs/2302.06590

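Controlled experiment in which developers with access to GitHub Copilot completed a programming task (implementing an HTTP server in JavaScript) 55.8% faster than a control group; a key example of AI accelerating professional velocity.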

Noy, S., & Zhang, W. (2023). "Experimental evidence on the productivity effects of generative artificial intelligence." Science, 381(6655).

https://www.science.org/doi/10.1126/science.adh2586

453 college-educated professionals; using ChatGPT for mid-level professional writing tasks cut time taken by 40% and raised output quality by 18%.

Educational Impact Studies

Kestin, G., Miller, K., Klales, A., et al. (2025). "AI tutoring outperforms in-class active learning: an RCT introducing a novel research-based design in an authentic educational setting." Scientific Reports, 15, 17458.

https://doi.org/10.1038/s41598-025-97652-6

194 Harvard students; AI tutoring produced 2x+ learning gains in less time with higher engagement.

Creativity and Innovation Studies

Doshi, A.R., & Hauser, O.P. (2024). "Generative AI enhances individual creativity but reduces the collective diversity of novel content." Science Advances, 10(28).

https://doi.org/10.1126/sciadv.adn5290

Individual creativity +8-9%, collective diversity -41%.

Habib, S., Vogel, T., et al. (2024). "How does generative artificial intelligence impact student creativity?" Journal of Creativity, 34(1).

https://www.sciencedirect.com/science/article/pii/S2713374523000316

Alternative Uses Test showed 26-38% improvements across creativity metrics.

Haase, J., & Pokutta, S. (2024). "Human-AI Co-Creativity: Exploring Synergies Across Levels of Creative Collaboration." Weizenbaum Institute and Humboldt University Berlin.

https://arxiv.org/abs/2411.12527

Four-level model of human-AI creative partnership from passive tool to true co-creator.

Systematic Reviews and Meta-Analyses

Vaccaro, M., et al. (2024). "When combinations of humans and AI are useful: A systematic review and meta-analysis." Nature Human Behaviour, 8, 2293–2303.

https://www.nature.com/articles/s41562-024-02024-1

Comprehensive analysis of human-AI collaboration effectiveness across domains.

Key Supporting Studies

Huang, Y., et al. (2019). "Human-AI Co-Learning for Data-Driven AI." National Taiwan University.

https://arxiv.org/pdf/1910.12544

Foundational framework for mutual learning between humans and AI systems.

Microsoft Work Trend Index (2024). "AI at Work Is Here. Now Comes the Hard Part." Microsoft WorkLab.

https://www.microsoft.com/en-us/worklab/work-trend-index/ai-at-work-is-here-now-comes-the-hard-part

Large-scale industry data on AI adoption and workplace transformation.